Keywords: VoIP, IP telephony, voice compression

1 Introduction

The Internet has grown explosively worldwide and has quickly drawn the whole of society into the information age. Its main traffic has shifted from basic services such as file transfer, e-mail and remote login to multimedia services, of which VoIP is representative. VoIP (Voice over IP) digitizes the analog voice signal, compresses it in segments, adds an IP header to each segment according to defined rules, and routes or switches the resulting packets across the IP network to the destination address, where the reverse process restores the IP packets to a voice signal. VoIP involves several relatively complex technologies, of which packet voice technology and voice coding and compression are the most critical.

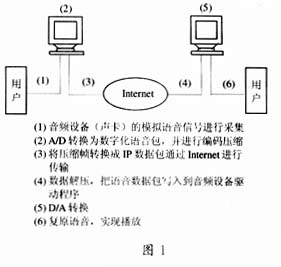

2 System Overview

This system is developed on the Windows platform with Visual C++ and is based on the Internet Protocol. It uses an existing full-duplex sound card and the Internet to implement PC-to-PC calls. The system works in six steps. First, the analog voice signal is captured from the audio device (sound card) and converted into digital voice data by A/D (analog-to-digital) conversion; second, the voice data is compressed with a coding and compression algorithm; third, the compressed frames are packed into IP packets according to defined packaging rules and transmitted over the data network; fourth, the data is decompressed at the destination; fifth, the decompressed voice data is written to the audio device driver; finally, D/A (digital-to-analog) conversion restores the analog signal and plays back the voice, which completes the voice communication. The entire process is shown in Figure 1.

3 System Implementation

3.1 Voice processing

Voice data processing can be divided into two parts: A/D conversion, which converts the analog input of the original sound into digital data, and D/A conversion, which converts the digital data back into an analog signal. During a call, speaking and listening are what the user experiences most directly, so the quality of voice data processing directly determines the success or failure of the system.
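To make the two conversions concrete, the following is a minimal sketch of what they amount to numerically. It assumes 16-bit linear PCM, which the article does not state, and the function names `quantize`, `dequantize` and `sample_tone` are hypothetical helpers, not part of the system. In the real system the sound card and its driver perform these steps.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// "A/D" step (assumed 16-bit linear PCM): map an amplitude in [-1.0, 1.0]
// to a signed 16-bit sample, clipping anything outside that range.
std::int16_t quantize(double x) {
    if (x > 1.0) x = 1.0;
    if (x < -1.0) x = -1.0;
    return static_cast<std::int16_t>(std::lround(x * 32767.0));
}

// "D/A" step: map a 16-bit sample back to an amplitude in [-1.0, 1.0].
double dequantize(std::int16_t s) {
    return static_cast<double>(s) / 32767.0;
}

// Sample a sine tone of freq_hz at rate_hz samples per second, n samples.
std::vector<std::int16_t> sample_tone(double freq_hz, int rate_hz, int n) {
    assert(rate_hz > 0 && n >= 0);
    const double pi = 3.14159265358979323846;
    std::vector<std::int16_t> pcm;
    pcm.reserve(static_cast<std::size_t>(n));
    for (int i = 0; i < n; ++i) {
        pcm.push_back(quantize(std::sin(2.0 * pi * freq_hz * i / rate_hz)));
    }
    return pcm;
}
```

Calling `sample_tone(1000.0, 8000, 8)` yields one full period of a 1 kHz tone at an assumed 8 kHz sampling rate. A round trip through `quantize` and `dequantize` changes an in-range amplitude by at most half a quantization step.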

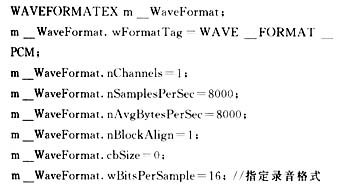

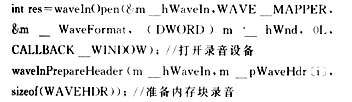

The system converts voice directly into data held in memory rather than saving it as a sound file, and on playback it likewise plays the voice data directly from memory instead of playing a file. This avoids time-consuming hard disk reads and writes and improves the real-time behaviour of voice calls. The easy-to-use high-level multimedia functions provided by the programming language cannot do this; it can only be done through the low-level audio services of the Windows MDK (Multimedia Development Kit). The names of these functions and structures are generally prefixed with "wave". Recording or playing audio under Windows essentially means reading audio data from, or writing it to, the audio device driver. The low-level waveform audio functions exchange audio data with the device driver in data blocks described by the WAVEHDR structure.

Taking recording as an example, several preparations are needed: open the recording device, obtain the recording handle, specify the recording format, and allocate several memory blocks for recording. When recording starts, all the memory blocks are first handed to the recording device, which writes voice data into them in turn. When a block is full, the recording device sends the Windows message MM_WIM_DATA to the corresponding window to notify the program. The usual handling at this point is to copy the data out of the block, for example by writing it to a file. In this system, the data is instead compressed and sent over the network, after which the block is cleared and returned to the recording device. This forms a continuous recording process. When recording ends, all memory blocks are released and the recording device is closed. In terms of the low-level API, these steps correspond, in order, to waveInOpen(), waveInPrepareHeader(), waveInAddBuffer() and waveInStart() for setup, waveInAddBuffer() again each time a block is returned, and waveInReset(), waveInUnprepareHeader() and waveInClose() for shutdown.
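As a rough illustration of the block cycle described above, the following sketch simulates it without touching the real audio API, so it runs on any platform. `Block`, `MockRecorder` and `record_session` are hypothetical names introduced here; the comments only point to the Windows calls whose role each step plays. Appending the samples to a vector stands in for the system's "compress and send" step.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <functional>
#include <utility>
#include <vector>

// One recording block; it plays the role that a WAVEHDR-described buffer
// plays in the real API.
struct Block {
    std::vector<std::int16_t> samples;  // fixed capacity, set by the application
    std::size_t filled = 0;             // samples written so far
};

// Hypothetical stand-in for the recording device (not a Windows type).
class MockRecorder {
public:
    using OnFull = std::function<void(Block&)>;

    explicit MockRecorder(OnFull on_full) : on_full_(std::move(on_full)) {}

    // Hand an empty block to the device, as waveInAddBuffer() does.
    void add_buffer(Block* b) {
        assert(b != nullptr && !b->samples.empty());
        b->filled = 0;
        queue_.push_back(b);
    }

    // Simulate capture: fill queued blocks in turn and report each full one.
    // Returns the number of samples lost because no block was queued.
    std::size_t capture(const std::vector<std::int16_t>& input) {
        std::size_t dropped = 0;
        for (std::int16_t s : input) {
            if (queue_.empty()) { ++dropped; continue; }
            Block* b = queue_.front();
            b->samples[b->filled++] = s;
            if (b->filled == b->samples.size()) {
                queue_.pop_front();
                on_full_(*b);  // plays the role of MM_WIM_DATA
            }
        }
        return dropped;
    }

private:
    OnFull on_full_;
    std::deque<Block*> queue_;
};

// Record `input` with a pool of blocks; each full block is forwarded
// (here: appended to the result) and then returned to the device.
std::vector<std::int16_t> record_session(const std::vector<std::int16_t>& input,
                                         std::size_t num_blocks,
                                         std::size_t block_len) {
    std::vector<Block> pool(num_blocks);
    for (Block& b : pool) b.samples.resize(block_len);

    std::vector<std::int16_t> forwarded;
    MockRecorder* dev_ptr = nullptr;
    MockRecorder dev([&](Block& b) {
        forwarded.insert(forwarded.end(), b.samples.begin(), b.samples.end());
        dev_ptr->add_buffer(&b);  // recycle the block
    });
    dev_ptr = &dev;

    for (Block& b : pool) dev.add_buffer(&b);
    dev.capture(input);
    return forwarded;
}
```

Because every full block is returned to the device immediately, two blocks are enough to keep this simulated recording continuous. If the handler did not recycle blocks, `capture` would start reporting dropped samples once the queue ran empty, which is the loss of recorded data the text warns about.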

Developers can take full advantage of the multitasking mechanism of Windows: while the original sound is being sampled, the sampled data can be processed in real time and the processed audio played back in real time. Recording, audio processing, and playback, three originally independent processes, thus run asynchronously and in parallel, so that the processed sound is played back in step with the sampling of the original sound.

In low-level audio development the volume of audio data is generally large, and the application must continuously supply audio data blocks to the device driver to keep recording or playback continuous. Because recording and playback are carried out in the background by the device driver controlling the audio hardware, the application must detect when a data block has been used up and supply the next one in time, to avoid pauses in playback or loss of recorded data. The callback mechanism of the low-level audio services provides a way to detect how the data blocks are being used. When the audio device is opened, the fdwOpen parameter of the device-opening function (waveInOpen() or waveOutOpen()) specifies whether an event, function, thread, or window serves as the callback object, and the dwCallback parameter gives the corresponding object handle or function address. The device driver then sends messages to the callback object to report the processing status of the audio data blocks, and the program responds to these messages in its window procedure or callback function.
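The difference between a function callback and a window callback can be sketched as follows. This is a portable simulation under stated assumptions, not the Windows API: `MockPlayer`, `Notification`, `Msg` and `pump` are hypothetical names, and a plain `std::queue` stands in for a window's message queue. A function callback runs as soon as the event occurs, while a window callback is posted and handled later by the message loop.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Status reports from the mock device; they loosely mirror the
// open / block-done / close notifications of the real service.
enum class Msg { Open, Done, Close };

struct Notification {
    Msg msg;
    int block_id;  // -1 when the message is not about a block
};

using Handler = std::function<void(const Notification&)>;

// Hypothetical playback device that reports progress to one callback object.
class MockPlayer {
public:
    // Function-style callback: the handler runs as soon as the event occurs.
    void open_with_function(Handler h) {
        assert(h);
        notify_ = std::move(h);
        notify_({Msg::Open, -1});
    }

    // Window-style callback: events are posted to a queue and handled later
    // by the application's message loop.
    void open_with_queue(std::queue<Notification>* q) {
        assert(q != nullptr);
        notify_ = [q](const Notification& n) { q->push(n); };
        notify_({Msg::Open, -1});
    }

    // Pretend to play one block, then report that it is finished.
    void write(int block_id) { notify_({Msg::Done, block_id}); }

    void close() { notify_({Msg::Close, -1}); }

private:
    Handler notify_;
};

// Minimal message loop: drain the queue and dispatch each message.
inline void pump(std::queue<Notification>& q, const Handler& h) {
    while (!q.empty()) {
        Notification n = q.front();
        q.pop();
        h(n);
    }
}
```

In both styles the application learns that a block is finished from a `Done` notification and can then supply the next block. The queue variant defers that work to the message loop, which is the usual trade-off between the two callback types.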

3.2 Voice compression

The traditional telephone network transmits voice by circuit switching and requires a basic bandwidth of 64 kbit/s (8,000 samples per second at 8 bits per sample). To transmit voice over an IP-based packet network, the analog voice signal must be specially processed so that it is suitable for transmission over a connectionless packet network. This is called packet voice technology, and voice compression is an important part of a packet voice system. At present, the maximum access rate through a modem is 56 kbit/s, which falls far short of the requirements of multimedia communication. Faster access technologies such as ISDN and ADSL exist, but they are not yet widespread. Voice must therefore be processed with a compression algorithm, and compression is also necessary simply to save network bandwidth.

Audio is the main channel through which most multimedia applications convey information to users. Audio data generally has a high sampling rate; left uncompressed, it takes a great deal of storage space and is transmitted very inefficiently over the network, so digital audio compression coding occupies a very important position in multimedia technology. Many compression methods exist, differing in compression ratio, restored sound quality, encoding format, and algorithm. Some of the algorithms are quite complex, and it is impractical for an ordinary application to implement the encoding and decoding itself. Fortunately, Windows 9x/NT 4.0/2000 provides strong support for multimedia applications through the ACM (Audio Compression Manager), which manages all audio codecs in the system (a codec, short for coder-decoder, is a driver that encodes and decodes audio data). Through the programming interface provided by the ACM, an application can call the codecs already installed in the system to compress and decompress audio data. The names of these functions and structures are generally prefixed with "acm" or "ACM". The audio codecs of Windows 9x/NT 4.0/2000 support a number of audio compression standards, such as Microsoft ADPCM, Interactive Multimedia Association (IMA) ADPCM, and DSP Group TrueSpeech(TM).

This system tested and compared four representative compression standards: MS ADPCM, IMA ADPCM, MS GSM 6.10, and DSP Group TrueSpeech(TM). In terms of compression ratio, MS ADPCM and IMA ADPCM achieve 4:1, MS GSM 6.10 achieves 2:1, and DSP Group TrueSpeech(TM) reaches 10:1. In terms of restored sound quality, MS GSM 6.10 appears to be better and supports a relatively high sampling frequency, but its compression ratio is too low, so we abandoned it. DSP Group TrueSpeech(TM) has a very high compression ratio, which greatly reduces the bandwidth requirement and makes it suitable for transmission over the Internet, and its restored sound quality is acceptable, so we chose it in the end.
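The bandwidth argument can be checked with a little arithmetic. The sketch below uses the compression ratios reported above and the 56 kbit/s modem limit from the text. The 8 kHz, 16-bit, mono source format (128 kbit/s) is an assumption made only for this example, since the article does not state the recording format. Packet header overhead is ignored, and the helper names are hypothetical.

```cpp
#include <cassert>

// Bit rate of uncompressed PCM audio in bit/s.
inline long pcm_bitrate(long sample_rate_hz, int bits_per_sample, int channels) {
    return sample_rate_hz * bits_per_sample * channels;
}

// Bit rate after compressing at a ratio of `ratio`:1.
inline double compressed_bitrate(long source_bps, double ratio) {
    assert(ratio > 0.0);
    return static_cast<double>(source_bps) / ratio;
}

// True if the stream fits the link, ignoring protocol overhead.
inline bool fits_link(double stream_bps, double link_bps) {
    return stream_bps <= link_bps;
}

// Assumed source format for the example: 8 kHz, 16-bit, mono.
inline long assumed_source_bps() { return pcm_bitrate(8000, 16, 1); }
```

Under that assumption, a 2:1 ratio gives 64 kbit/s, which does not fit a 56 kbit/s modem link. A 4:1 ratio gives 32 kbit/s and a 10:1 ratio gives 12.8 kbit/s, both of which fit. This is consistent with the decision to drop the 2:1 codec despite its sound quality.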

3.3 Voice transmission

The system communicates over TCP/IP using sockets, achieving real-time communication by establishing a transport-layer connection between the computers at the two ends. To make development on the Windows platform easier, Microsoft introduced Windows Sockets, a network programming interface modeled on the popular socket interface of BSD UNIX from the University of California, Berkeley. It contains not only the familiar Berkeley-style library functions but also a set of Windows-specific extensions, so that programmers can make full use of the Windows message-driven mechanism. According to the type of data transmitted, Windows Sockets are divided into two types: stream sockets (SOCK_STREAM) and datagram sockets (SOCK_DGRAM). A stream socket provides a bidirectional, ordered, error-free, non-duplicated data stream with no record boundaries; TCP uses this type of socket. A datagram socket is also bidirectional but does not guarantee reliable, ordered, or non-duplicated delivery, so a process receiving from a datagram socket may find that data is duplicated or arrives in a different order from that in which it was sent.

Based on the above analysis, this system uses stream sockets. A two-way connection is established between the two PCs, which ensures error-free, in-order delivery of the audio data during the call. Concretely, two classes, CSocketListenThread and CMySocketThread, are derived from CWinThread. The first class performs initialization and then listens for incoming socket connection requests in a continuous loop. When a request arrives, the second class assigns a socket to it and establishes the connection. The second class also defines several helper functions that make event handling easier; the most typical are ReadFromSocket() and SendToSocket(), used for receiving and sending respectively. They are implemented mainly by calling the underlying Windows API functions. With these two classes, the system can connect, receive, and send over sockets.
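Because a stream socket has no record boundaries, the receiver has to recover the boundaries of the compressed frames itself. The article does not say how this system does so. One common approach, shown here purely as an assumption, is to prefix each frame with its length. `encode_frame` and `FrameReader` are hypothetical names, and the byte vectors stand in for the data passed to and returned by the socket calls.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Prefix a compressed frame with its length as a 2-byte big-endian integer
// (an assumed wire format, not taken from the article).
Bytes encode_frame(const Bytes& payload) {
    assert(payload.size() <= 0xFFFF);
    Bytes out;
    out.reserve(payload.size() + 2);
    out.push_back(static_cast<std::uint8_t>(payload.size() >> 8));
    out.push_back(static_cast<std::uint8_t>(payload.size() & 0xFF));
    out.insert(out.end(), payload.begin(), payload.end());
    return out;
}

// Rebuilds frames from a byte stream that may be split at arbitrary points.
class FrameReader {
public:
    // Feed bytes as they arrive; returns every frame completed by this chunk.
    std::vector<Bytes> feed(const Bytes& chunk) {
        buf_.insert(buf_.end(), chunk.begin(), chunk.end());
        std::vector<Bytes> frames;
        std::size_t pos = 0;
        while (buf_.size() - pos >= 2) {
            std::size_t len =
                (static_cast<std::size_t>(buf_[pos]) << 8) | buf_[pos + 1];
            if (buf_.size() - pos - 2 < len) break;  // frame still incomplete
            auto first = buf_.begin() + static_cast<std::ptrdiff_t>(pos + 2);
            auto last = first + static_cast<std::ptrdiff_t>(len);
            frames.emplace_back(first, last);
            pos += 2 + len;
        }
        // Keep only the bytes of the unfinished frame for the next call.
        buf_.erase(buf_.begin(), buf_.begin() + static_cast<std::ptrdiff_t>(pos));
        return frames;
    }

private:
    Bytes buf_;
};
```

A sender would pass the result of `encode_frame` to its send routine, and a receiver would hand whatever each read returns to `FrameReader::feed`. Frames come out whole regardless of how the stream was split in transit.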

It should also be pointed out that this system uses Windows Sockets 2. Compared with Windows Sockets 1.1, Windows Sockets 2 provides fast, multi-threaded data transmission, better performance, uniform access to multiple network transports, and protocol-independent multipoint/multicast transmission. More importantly, it provides an interface for negotiating QoS (Quality of Service) on new network media (ATM, ISDN, etc.). A multimedia application developer can thus request a specified transmission rate and establish or tear down a connection according to the achievable throughput, and the application can be notified automatically when the network is temporarily unavailable. For these reasons we chose Windows Sockets 2 for network data transmission. Although the implementation is relatively complex, it gives the system good performance and scalability.

4 Conclusion

The Internet is the most widely used and fastest-growing communication network today. It is a packet-switched network: user data is encapsulated in packets, which also carry additional information for routing, error correction, and flow control. Packets travel independently through the network, and because network conditions change and paths differ, their arrival time at the destination is not fixed, so delivery is not real-time. Generally speaking, the Internet is better suited to data transmission, but existing technologies allow the audio signal, after analog-to-digital conversion, to be transmitted over the Internet as data. Because a data network shares its resources by statistical time-division multiplexing and no communicating entity can monopolize a channel, packet voice technology can greatly improve the utilization of network resources.

It should also be noted that current VoIP technology has some shortcomings, such as poor call quality. The Internet was designed for data communication and works by packet transmission. When voice packets are transmitted over a network without quality-of-service guarantees, they may arrive out of order and with varying delay (network jitter), which causes voice distortion and packet loss. With current technology, intermittent audio is therefore unavoidable when talking over an IP phone, and there are problems with sound quality, fluency, and delay. In addition, compression technology needs to improve. Although many compression standards exist, there is still room for a better balance between compression ratio and restored sound quality.

However, we believe that with the continued development of technology and the accelerating unification of networks, the convergence of data networks and telecommunications networks is inevitable, and CTI (Computer Telephony Integration) technology will increasingly show its value.

