0 Preface

With the widespread adoption of advanced technology in the military field, weapons and equipment are becoming increasingly sophisticated, precise, and cutting-edge. Traditional military training suffers from long training times, high costs, and limited training space, so it often fails to achieve the expected results and cannot meet the needs of modern military training. Simulation training emerged to address these problems.

To further improve training effectiveness, this paper uses an intelligent voice interaction chip to design a teaching and playback system for a simulation trainer. The teaching system demonstrates the standard operating procedures and the corresponding operating phenomena to the operator, which shortens training time and improves the training effect. The playback system records each operator's spoken commands, sound intensity, actions, timing, and operating phenomena during training, and replays the training process once the session ends so that operators can correct their mistakes promptly. The teaching system can therefore be understood as a playback of the standard training procedure. The system does not require virtual reality technology and can be implemented on a small embedded system.
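The recording and replay idea described above can be illustrated with a minimal host-side sketch in Python. The field names, the `TrainingEvent` and `TrainingRecorder` classes, and the example values are all assumptions made for illustration; the paper does not specify its data layout.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TrainingEvent:
    """One recorded step of a training session (hypothetical layout)."""
    time_s: float          # seconds since the start of the session
    command: str           # recognized spoken command, "" if none
    sound_intensity: int   # raw reading from the sound intensity unit
    action: str            # panel action, e.g. "switch_1_on"
    phenomenon: str        # identifier of the instrument response to show


@dataclass
class TrainingRecorder:
    """Collects events during training and replays them afterwards."""
    events: List[TrainingEvent] = field(default_factory=list)

    def record(self, event: TrainingEvent) -> None:
        self.events.append(event)

    def replay(self, emit: Callable[[TrainingEvent, float], None]) -> None:
        """Call emit(event, delay) for each event in time order.

        delay is the time in seconds since the previous event, so the
        caller can wait that long before driving the slave device.
        """
        previous = 0.0
        for event in sorted(self.events, key=lambda e: e.time_s):
            emit(event, event.time_s - previous)
            previous = event.time_s
```

Under these assumptions, a teaching session is the same replay applied to a prepared list of standard events instead of a recorded one.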

1 System principle

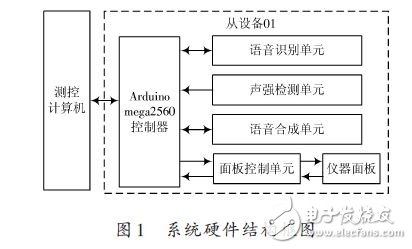

The simulation trainer consists of a measurement and control computer and multiple slave devices, as shown in Figure 1. Only one slave device is described here. The hardware consists mainly of the measurement and control computer, an Arduino mega2560 controller, a voice recognition unit, a sound intensity detection unit, a voice synthesis unit, a panel control unit, and an instrument panel. The panel control unit is the most complex part and contains a variety of control circuits. During simulation training, the slave device carries out the entire training process under the control of its Arduino mega2560 controller; in teaching and playback mode, it reproduces the phenomena of the training operations just performed. The specific circuit design is not covered here.

The voice recognition unit identifies the operator's spoken commands. The sound intensity detection unit measures the sound intensity, which serves as the basis for determining which slave device's operator issued the command. The Arduino mega2560 controller monitors the status of each component on the instrument panel to identify the operator's actions, which completes the recording of the training process. The operating phenomena of each instrument are prepared in advance for each operation, so they do not need to be recorded. During playback, the measurement and control computer reproduces the recorded process by driving the Arduino mega2560 controller of the corresponding slave device from the recorded data.
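One simple way to use sound intensity to attribute a command to a slave device is to pick the loudest reading above a noise floor. The sketch below assumes that rule; the function name `pick_speaking_slave`, the threshold value, and the integer slave ids are hypothetical, and the paper does not state which decision rule it actually uses.

```python
from typing import Dict, Optional


def pick_speaking_slave(intensities: Dict[int, int],
                        threshold: int = 50) -> Optional[int]:
    """Return the id of the slave with the loudest reading.

    intensities maps slave id -> sound intensity reading.
    Returns None when there are no readings or when even the loudest
    reading is below the (assumed) noise threshold.
    """
    if not intensities:
        return None
    slave_id, level = max(intensities.items(), key=lambda item: item[1])
    return slave_id if level >= threshold else None
```

For example, with readings `{1: 40, 2: 72, 3: 55}` the command would be attributed to slave 2, since the operator is assumed to stand closest to that device's microphone.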

2 Unit system design

2.1 Speech recognition unit design

Speech recognition technology is developing rapidly. By type of recognition target, it can be divided into speaker-dependent and speaker-independent recognition. Speaker-dependent recognition targets one particular person. Speaker-independent recognition targets most users; it generally requires collecting and training on voice recordings from many speakers, after which a high recognition rate can be achieved.

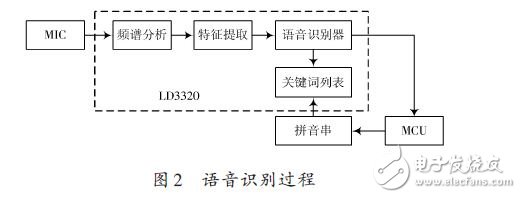

The LD3320 speech recognition chip used in this paper is based on Speaker-Independent Automatic Speech Recognition (SI-ASR) technology. The chip integrates high-precision A/D and D/A interfaces and requires no external FLASH or RAM. It supports voice recognition, voice control, and human-machine dialogue, providing a true single-chip speech recognition solution. In addition, the list of recognized keywords can be edited dynamically. The speech recognition process is shown in Figure 2.

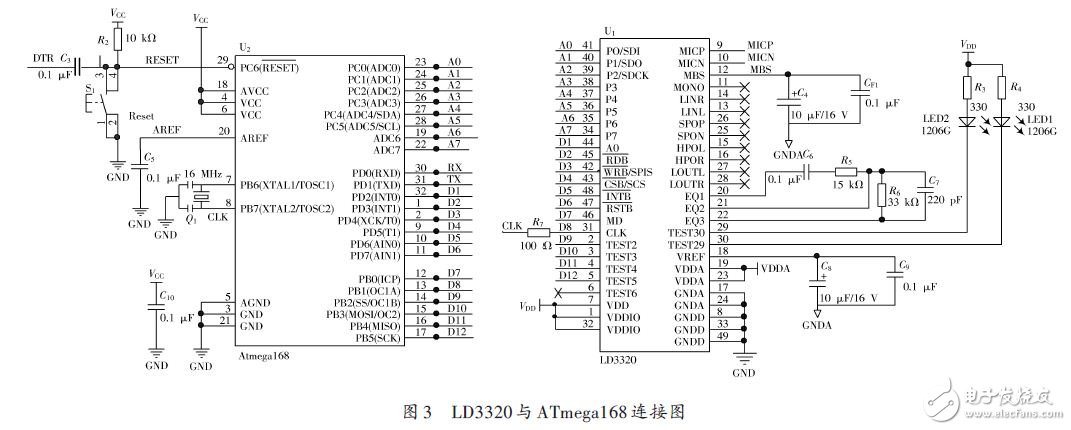

The speech recognition unit uses an ATmega168 as its MCU. The MCU controls the LD3320 to perform all speech recognition tasks and uploads the recognition result to the Arduino mega2560 controller through the serial port. All LD3320 operations are carried out through register accesses, and registers can be read and written in two ways: standard parallel mode and serial SPI mode. Parallel mode is used here, with the data port of the LD3320 connected to the I/O port of the MCU. The hardware connection diagram is shown in Figure 3.
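The paper does not describe how the recognition result is framed on the serial link. As an illustration only, the sketch below assumes a hypothetical 3-byte frame (header byte, keyword index, additive checksum) and shows how a receiver could pull valid results out of a noisy byte stream. The constant `FRAME_HEADER` and both function names are invented for this example.

```python
from typing import List

FRAME_HEADER = 0xAA  # hypothetical start-of-frame marker, not from the paper


def encode_result(keyword_index: int) -> bytes:
    """Pack a keyword index (0-255) into header, index, checksum."""
    if not 0 <= keyword_index <= 0xFF:
        raise ValueError("keyword index must fit in one byte")
    checksum = (FRAME_HEADER + keyword_index) & 0xFF
    return bytes([FRAME_HEADER, keyword_index, checksum])


def decode_results(stream: bytes) -> List[int]:
    """Extract every valid keyword index from a byte stream."""
    results: List[int] = []
    i = 0
    while i + 3 <= len(stream):
        header, index, checksum = stream[i], stream[i + 1], stream[i + 2]
        if header == FRAME_HEADER and checksum == (header + index) & 0xFF:
            results.append(index)
            i += 3  # consume the whole frame
        else:
            i += 1  # resynchronize one byte at a time
    return results
```

A checksum of this kind only catches simple corruption; it is shown here because it is cheap enough for a small MCU, not because the original design is known to use it.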

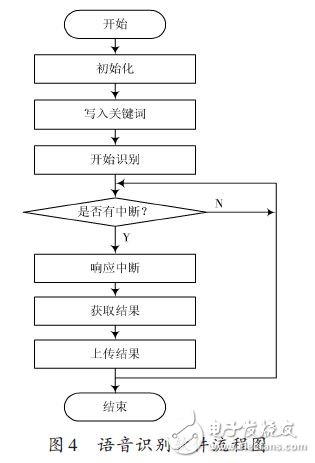

Speech recognition works in interrupt mode, and its workflow is divided into four steps: initialization, writing the keywords, starting recognition, and responding to the interrupt. The MCU program is written in the Arduino IDE [5]. After debugging, the program is uploaded through the serial port; it then controls the LD3320 to perform speech recognition and uploads the recognition result to the Arduino mega2560 controller. The software flow is shown in Figure 4.
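The four-step workflow can be modeled as a small state machine. The sketch below is a host-side Python model written for illustration, not the MCU firmware and not the LD3320 register interface; the class `RecognizerModel`, the `State` names, and the helper `run_cycle` are assumptions. It also assumes that each recognition cycle starts again from initialization, which is what allows the keyword list to change between cycles.

```python
from enum import Enum, auto
from typing import Dict, List, Optional


class State(Enum):
    IDLE = auto()
    INITIALIZED = auto()
    KEYWORDS_LOADED = auto()
    RECOGNIZING = auto()


class RecognizerModel:
    """Models: initialize -> write keywords -> start -> handle interrupt."""

    def __init__(self) -> None:
        self.state = State.IDLE
        self.keywords: Dict[int, str] = {}
        self.uploaded: List[int] = []  # indices passed on to the controller

    def initialize(self) -> None:
        self.keywords.clear()
        self.state = State.INITIALIZED

    def write_keywords(self, keywords: Dict[int, str]) -> None:
        if self.state is not State.INITIALIZED:
            raise RuntimeError("initialize() must run before writing keywords")
        self.keywords = dict(keywords)
        self.state = State.KEYWORDS_LOADED

    def start_recognition(self) -> None:
        if self.state is not State.KEYWORDS_LOADED:
            raise RuntimeError("keywords must be written before starting")
        self.state = State.RECOGNIZING

    def on_interrupt(self, best_index: Optional[int]) -> Optional[str]:
        """Handle the end-of-recognition interrupt.

        best_index is the best-matching keyword index reported by the
        chip, or None when nothing matched.
        """
        if self.state is not State.RECOGNIZING:
            return None  # spurious interrupt, ignore it
        self.state = State.IDLE  # the next cycle starts from initialization
        if best_index is None or best_index not in self.keywords:
            return None
        self.uploaded.append(best_index)
        return self.keywords[best_index]


def run_cycle(model: RecognizerModel, keywords: Dict[int, str],
              best_index: Optional[int]) -> Optional[str]:
    """Run one full recognition cycle with a simulated interrupt result."""
    model.initialize()
    model.write_keywords(keywords)
    model.start_recognition()
    return model.on_interrupt(best_index)
```

Enforcing the step order with explicit states makes out-of-sequence calls fail loudly, which is useful when the interrupt handler and the main loop share the recognizer.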
